The story of my Macaulay Culkin Christmas (thus far)! I’ve been updating on Insta and its linked Meta platforms.

I’d been toying with the idea of getting out of my regular reach for the holidays. Then came Fri 19th Dec: I was putting the mountain of projects on hold and saying goodbye to friends departing long term, and when I finally got to 11pm I said “enough”.

Within 48hrs, incl. one final neurodivergent book club and a Christmas-themed D&D game with some close friends, I’d recovered enough mental functioning to make a plan. 10 hrs before boarding the plane, I booked my ticket out and a place to stay when I landed.

My overarching need has been to start repairing my brain. 2025 was the hardest year yet. Just when I thought burnout had hit its core and I was at my last reserve, I found another layer, and found in myself another layer of resilience. I could feel my sense of self being stripped away as I lost more confidence, and watched my skills evaporate as if I were in the final chapters of my own personal Flowers for Algernon. It made sense to go to my upside-down – where the weather was cold, my expected outcome was not failure, and people called me friend.

The flight out had a hiccup, but I’m used to that now, and what would have been a problem in the past is now just a bit of business.

I landed at Newark Airport outside NYC and made my way to my excellent tiny hotel, Pod51. The rooms are Piet Mondrian themed! I braved the chill and found a pretty jumping pizza store on 2nd Av blaring Latin American dance music for a few partying folks. It was a good vibe.

I escaped with 2 slices bigger than my head(!), got back to my room by midnight, watched the local news and had pizza in bed. SUCCESS!

Since then, Jordan at the pizza store slips me an extra slice of garlic bread whenever I go. Great guy.

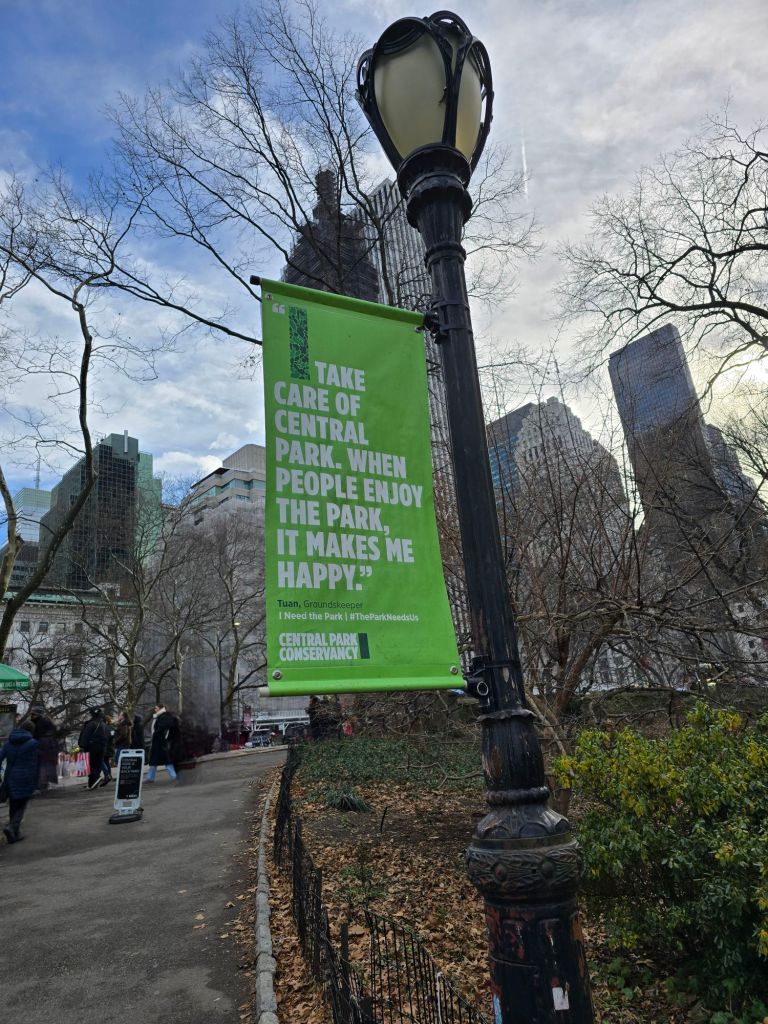

The next day I caught up with my friend Dale @ Beyond Sushi for a great vegan sushi lunch, before his expert tour of Midtown & Central Park West.

I took myself to Macy’s to buy myself a gift for Christmas Day, then to the Bryant Park skating rink. Then home to prepare to foil the Sticky Bandits. The gift is more pants for the cold – I’m up to wearing two pairs. I freaked out a little the first time I went to the bathroom and forgot I had two pairs on. I thought I’d turned into Ken.

After spending Christmas Day wandering through Central Park, finding a diner on the Upper East Side and generally being a badly organised tourist (by design!), I was treated by my friend Dale to a play, Marjorie Prime (by 2nd Stage). While we were eating all the tofu at Ollies Szechwan, I happened to glance out the window and it was snowing!? I’d never seen snow in my 39 years (and some months*) of existence, so this was pretty AuDHD-brain-popping. We had a great conversation about the issues with AI, and saw a wonderful show in an incredible theatre. Adding a Christmas snowfall definitely lightened the To Do column of the bucket list.

*177

I’m very grateful to Dale, who’s been exceptionally patient with my poor planning and touristy excitement as I repair my broken brain on this trip. He also keeps me eating well, which is difficult to do solo when burnt out.